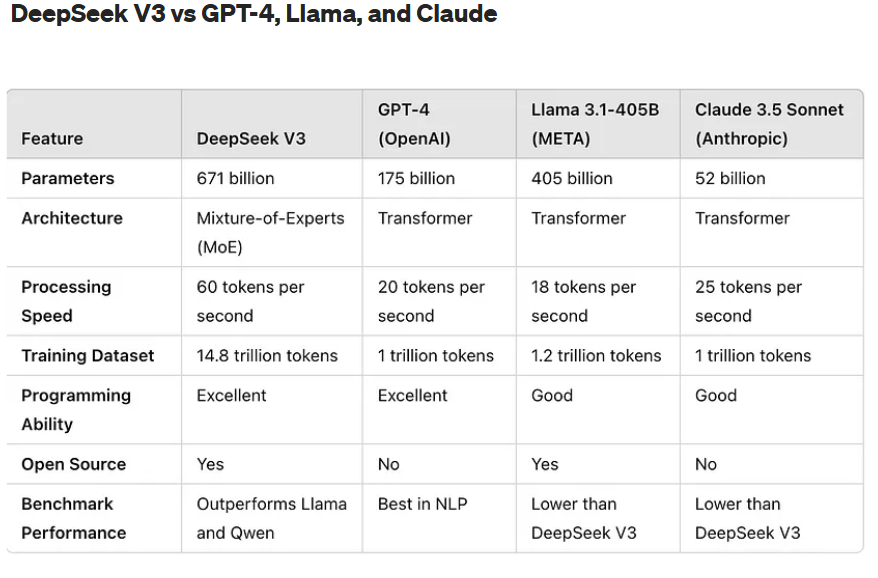

DeepSeek V3 has made waves in the artificial intelligence (AI) world by outperforming GPT-4 and Llama on several key benchmarks. Built on a Mixture-of-Experts (MoE) architecture with 671 billion parameters, of which only about 37 billion are activated for each token, DeepSeek V3 combines high speed, efficiency, and accuracy, pushing the limits of AI performance.

This blog discusses the key elements behind DeepSeek V3’s success and compares it with GPT-4 and Llama.

Key Points for the Success of DeepSeek V3

The success of DeepSeek V3 can be attributed to several critical points, each of which enhances its performance and makes it a standout model in the field of AI.

Mixture-of-Experts (MoE) Architecture

- DeepSeek V3 is built on a Mixture-of-Experts (MoE) architecture. Unlike dense models, which use all of their parameters for every token they process, an MoE model routes each token to a small subset of specialized sub-networks ("experts"), so only a fraction of the parameters are active at any one time. In DeepSeek V3, roughly 37 billion of the 671 billion parameters are activated per token, which sharply reduces computational overhead and lets the model handle complex tasks faster and with fewer resources.

- Because different experts can specialize in different aspects of language processing, DeepSeek V3 can handle specialized tasks well while maintaining strong performance across a wide range of applications.
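The routing idea can be sketched in a few lines of Python. This is a minimal, illustrative top-k gate of the kind described in the general MoE literature (a softmax over the selected experts' router scores); it is not DeepSeek V3's actual router, and the function names, toy experts, and gate vectors are hypothetical. What it shows is that only `k` experts are evaluated per token, no matter how many experts exist.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(token, experts, gate_weights, k=2):
    """Route one token to its top-k experts and mix their outputs.

    token:        list[float], the token's hidden vector
    experts:      list of callables, each mapping a vector to a vector
    gate_weights: list of vectors, one per expert (a hypothetical linear router)
    """
    # Router score for each expert: dot product of the token and its gate vector.
    scores = [sum(t * w for t, w in zip(token, g)) for g in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Normalise the selected scores so the mixing weights sum to 1.
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)  # only k experts actually run for this token
        out = [o + w * v for o, v in zip(out, y)]
    return out, top

# Usage: four toy experts that scale their input; record which ones run.
calls = []

def make_expert(i, scale):
    def expert(x):
        calls.append(i)
        return [scale * v for v in x]
    return expert

experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
out, chosen = moe_forward([0.5, -0.2, 0.1], experts, gates, k=2)
print("experts used:", chosen, "of", len(experts))  # → experts used: [0, 3] of 4
```

Here the router scores are 0.5, -0.2, 0.1, and 0.4, so only experts 0 and 3 are called; the other two cost nothing for this token. Production MoE layers add refinements such as load balancing across experts, which this sketch omits.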

Scalability and Training on a Massive Dataset

- DeepSeek V3 was pre-trained on 14.8 trillion tokens, a very large dataset by current standards. (OpenAI has not disclosed how much data GPT-4 was trained on, so a direct comparison is not possible.) Data at this scale exposes the model to an extensive variety of sources, making it more capable of handling diverse tasks, from automated coding to natural language understanding in highly specialized fields.

- The sheer volume of training data also helps DeepSeek V3 capture complex language patterns, contextual nuances, and domain-specific knowledge, which are critical for delivering accurate, context-aware outputs.
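To put 14.8 trillion tokens in perspective, the sketch below applies a common back-of-the-envelope rule from the scaling-law literature: training compute ≈ 6 × (parameters used per token) × (training tokens). Two inputs are assumptions not stated in this blog: the rule itself, which ignores attention and other overheads, and DeepSeek's reported figure of about 37 billion parameters activated per token. Comparing against a hypothetical dense 671-billion-parameter model shows why MoE makes training on such a large dataset affordable.

```python
def training_flops(active_params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per active parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * active_params * tokens

TOKENS = 14.8e12        # training tokens, as stated in the text
TOTAL_PARAMS = 671e9    # total parameters, as stated in the text
ACTIVE_PARAMS = 37e9    # parameters activated per token (DeepSeek's reported figure)

moe = training_flops(ACTIVE_PARAMS, TOKENS)
dense = training_flops(TOTAL_PARAMS, TOKENS)  # hypothetical dense model of equal size

print(f"MoE estimate:   {moe:.2e} FLOPs")
print(f"Dense estimate: {dense:.2e} FLOPs")
print(f"Ratio:          {dense / moe:.1f}x")
```

Under these assumptions the MoE model needs roughly 3.3 × 10^24 FLOPs, about 18 times less than the hypothetical dense model; the ratio is simply 671 / 37.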

Exceptional Processing Speed

- One of DeepSeek V3’s key achievements is its processing speed. DeepSeek reports a generation rate of about 60 tokens per second, roughly three times the figures commonly cited for GPT-4 and Llama (about 20 and 18 tokens per second, respectively), although real-world throughput depends heavily on hardware and serving setup. This speed is particularly valuable for real-time applications such as virtual assistants, automated customer support, and live data analytics.

- The combination of MoE and efficient resource utilization allows DeepSeek V3 to handle large volumes of data quickly, making it a prime candidate for industries that require both speed and accuracy.
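What these throughput figures mean for a user can be worked out directly: streaming time is the response length divided by the decoding rate. The sketch below uses the rates quoted in this article and a hypothetical 600-token reply; actual throughput varies with hardware, batch size, and prompt length.

```python
def generation_time(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to stream num_tokens at a steady decoding rate."""
    return num_tokens / tokens_per_second

RESPONSE_TOKENS = 600  # hypothetical length of one chatbot reply

# Decoding rates as quoted in this article (tokens per second).
rates = {"DeepSeek V3": 60, "GPT-4": 20, "Llama": 18}

for model, tps in rates.items():
    print(f"{model:12s} {generation_time(RESPONSE_TOKENS, tps):5.1f} s")
```

At these rates a 600-token reply takes 10 seconds from DeepSeek V3, compared with 30 seconds at 20 tokens per second and about 33 seconds at 18, a difference users notice in interactive settings.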

Open-Source Accessibility

- Another crucial factor in DeepSeek V3’s success is its open-source release. By publishing the model weights and code, DeepSeek has fostered collaboration within the AI community, enabling developers, researchers, and organizations to use, fine-tune, and build on the model. This openness accelerates innovation and allows for the rapid adoption of DeepSeek V3 across different sectors.

- Open-source AI also allows smaller companies and academic institutions to leverage state-of-the-art technology without the financial barriers posed by proprietary models, democratizing access to powerful AI tools.

Focus on Efficiency and Resource Optimization

- DeepSeek V3’s ability to deliver high performance with modest compute is a key element of its success. Because its MoE architecture activates only a fraction of its parameters for each token, it needs far less computation per token than a dense model of similar size, reducing both operational costs and environmental impact. Memory requirements remain substantial, however: all 671 billion parameters must still be stored, so serving the full model still calls for multi-GPU hardware.

- This focus on sustainable AI is increasingly important as AI models become more powerful and widely used, and it sets DeepSeek V3 apart from other models that are energy-intensive and difficult to scale.
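The efficiency argument is easiest to see by separating compute from memory. The sketch below assumes DeepSeek's reported figure of about 37 billion active parameters, a rule-of-thumb cost of 2 FLOPs per active parameter per generated token, and 1 byte (FP8) or 2 bytes (BF16) per stored parameter; it counts weights only, ignoring the KV cache and activations.

```python
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}

def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights (no KV cache or activations)."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9

def flops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per generated token."""
    return 2 * active_params

TOTAL, ACTIVE = 671e9, 37e9  # total vs. activated parameters

for dtype in ("fp8", "bf16"):
    print(f"{dtype}: {weight_memory_gb(TOTAL, dtype):,.0f} GB of weights")
print(f"MoE compute per token:   {flops_per_token(ACTIVE):.1e} FLOPs")
print(f"Dense compute per token: {flops_per_token(TOTAL):.1e} FLOPs")
```

Under these assumptions, per-token compute drops from about 1.3 × 10^12 to 7.4 × 10^10 FLOPs, yet the weights alone occupy roughly 671 GB in FP8 or 1,342 GB in BF16. MoE saves compute and energy per token, not memory.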

Efficiency and Collaboration in AI

DeepSeek V3 has highlighted several key lessons for the future of AI development:

Efficient Resource Utilization: DeepSeek V3 demonstrates that efficiency is just as important as performance. The use of MoE and other optimization techniques ensures that AI models can perform complex tasks without requiring vast amounts of computational power.

Importance of Speed: Speed is a critical component of success in real-time AI applications. DeepSeek V3’s impressive processing speed shows that faster AI systems are not only desirable but also feasible with the right architectural approach.

The Power of Open-Source Collaboration: DeepSeek V3’s success is also driven by its open-source nature. Open-source models foster community collaboration and innovation, leading to faster advancements and broader accessibility for developers and companies worldwide.

Future of AI

As AI continues to evolve, DeepSeek V3 sets the stage for the next generation of AI models. The future of AI will likely involve models that are even more efficient, capable of handling larger datasets, and able to perform more sophisticated tasks across industries.

DeepSeek V3’s Lasting Impact: DeepSeek V3 is likely to influence AI development for years to come, particularly in open-source applications. Its impact may be felt in fields like healthcare, cybersecurity, and autonomous systems, where speed and efficiency are essential.

Open-Source AI’s Future: As open-source models gain momentum, more companies and researchers are likely to turn to tools like DeepSeek V3, a trend that could lead to greater collaboration and more inclusive AI development across the globe.

AI’s Role in Industry and Society: AI’s potential to solve complex problems in fields such as climate change, healthcare, and education will continue to expand. The next decade will see AI as a key player in global problem-solving, driving productivity and improving quality of life.

